Work-Bench Snapshot: Augmenting Streaming and Batch Processing Workflows

The Work-Bench Snapshot Series explores the top people, blogs, videos, and more shaping the enterprise around a particular topic we’re looking at from an investment standpoint.

There are two ongoing but divergent trends in enterprise software that are taking up a lot of airtime among companies building in Applied AI. Both are catalyzed by the emergence of Large Language Models (LLMs). While these trends might not be so different from how software companies have historically been founded, with all eyes on AI they feel more palpable today than ever before:

The divergence in these two use cases boils down to LLMs and their power to democratize access to AI and machine learning capabilities. LLMs are turning the traditional applied AI market on its head. While full-stack machine learning was once a competitive advantage reserved for teams of highly trained PhDs, it is now just an API call away.

For example, back when I was a Product Manager at Hyperscience, it took us years to acquire the raw training data needed to build proprietary models that beat out traditional optical character recognition (OCR) techniques for document extraction. Today, generally available LLMs and other task-specific models work out of the box, abstracting away the significant time and money once needed to build these workflows.

As a result, every company is now an AI company, fostering a new ethos in how products are built.

Startups are building AI-native products from the get-go in hopes of either disrupting traditional incumbents with more powerful and productive software, or leveraging AI/ML to build products for net-new use cases once overlooked for being too technically challenging. As a result, we’re seeing products that unearth new experiences and modalities for human-machine interaction.

But even as many companies across both categories have captivated users and quickly grown in popularity, not all that shimmers is gold:

As the price to develop and integrate applications with AI/ML drops, so do the barriers to entry, leading to a sea of competitors across both existing and net new use cases.

While we already knew that no startup idea is truly unique, we’re seeing an influx of founders scanning the market to find use cases they can attach AI capabilities to. As a result, we’re seeing a concentration of companies emerge across functions such as sales, marketing, code generation, API generation, video generation, and many more. That’s not to say these companies won’t be successful, but founders need to be aware of the number of new entrants trying to capitalize on the same AI paradigm shift.

In the past generation of SaaS companies, those that entered competitive markets aimed to differentiate from the pack by expanding their product surface area to own more of the workflow or by creating a unique GTM motion:

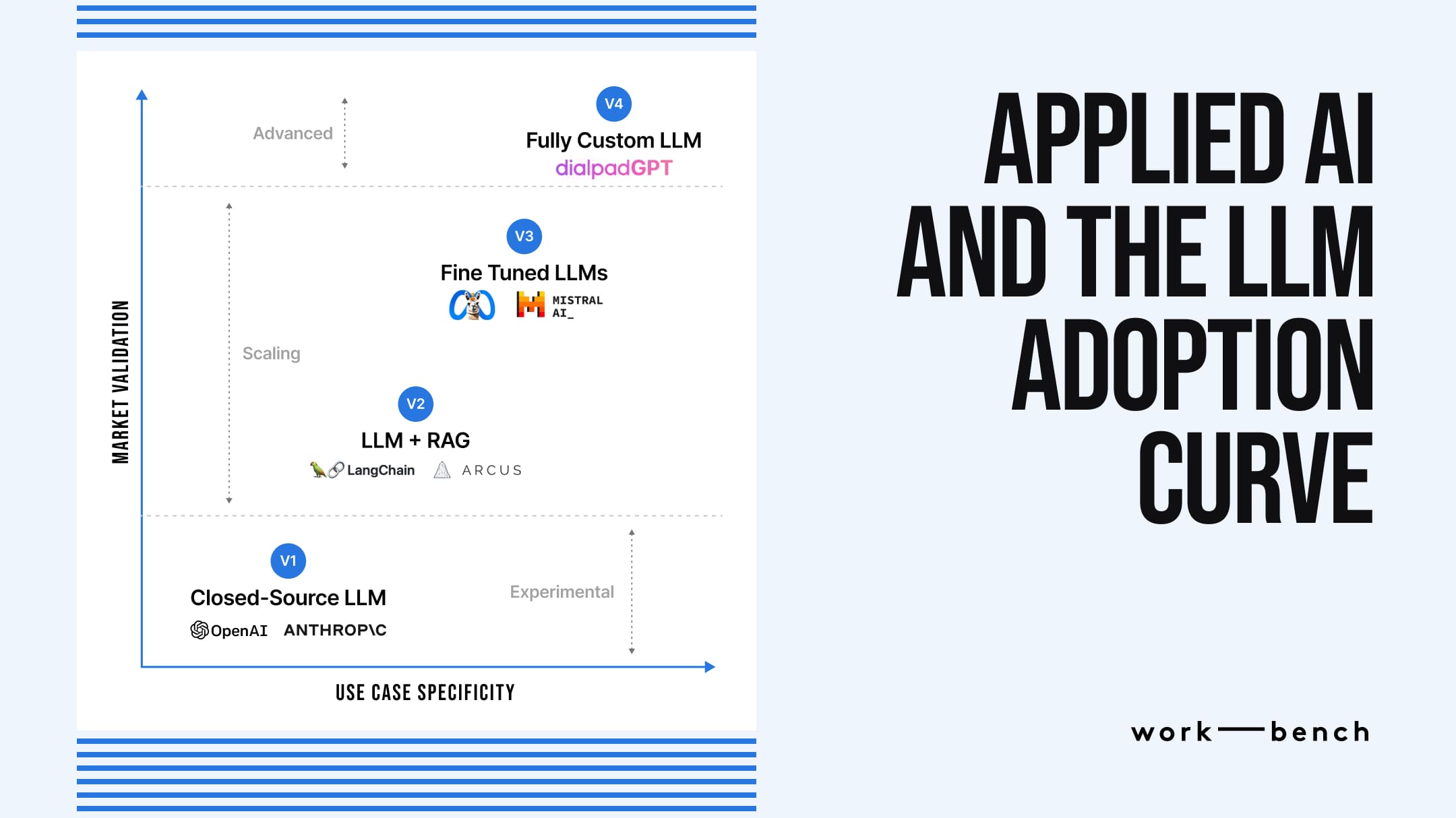

Today, however, many AI-native companies are taking a different route: they’re going after niche wedges in big markets, seeking to capture key customer data that will, over time, improve their product’s value as they automate more and more of the customer’s workflow and show customers more value relative to the competition. On top of that, many AI-native companies are aiming to differentiate at the model level by leveraging techniques like Retrieval-Augmented Generation (RAG), fine-tuning models on specific datasets, or daisy-chaining LLMs and small language models (SLMs) together for a specific business need.
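For readers less familiar with RAG, here is a minimal sketch of the idea: retrieve the customer documents most relevant to a question, then place them in the prompt sent to a model. Everything in it is an illustrative assumption rather than any particular company’s approach: the function names (`retrieve`, `build_prompt`) are hypothetical, a simple word-overlap cosine score stands in for a real embedding model and vector store, and the call to an LLM is left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query.

    Assumption: word overlap is a stand-in for the embedding search
    a production system would typically use.
    """
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # Hypothetical customer data, invented for this example.
    docs = [
        "Invoices are due within 30 days of receipt.",
        "Our office is closed on public holidays.",
        "Late invoices incur a 2% monthly fee.",
    ]
    print(build_prompt("When are invoices due?", docs))
```

The point of the sketch is that the model itself is untouched: whatever differentiation exists comes from the data being retrieved, which is why so many companies converge on the same playbook.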

The only problem? It’s almost exactly what every AI-native company I meet brings up: start with accessible models, add customer data to refine model outputs, acquire enough data over time through your unique workflow to create your own model, and finally gain a true competitive advantage by owning the data layer.

To that point, every applied AI company falls somewhere on what I’m calling the LLM Adoption Curve:

While the above looks like a great strategy in theory, in practice the competition is heating up, and competitors see a similar future for their own products.

To determine how to truly differentiate AI companies from the growing herd, here are a few key questions I’m thinking about:

As we continue to dig into these questions, we’re eager to speak with more founders, practitioners, and builders in the category. And if you’re an early-stage startup building the future of AI, I’d love to hear from you!