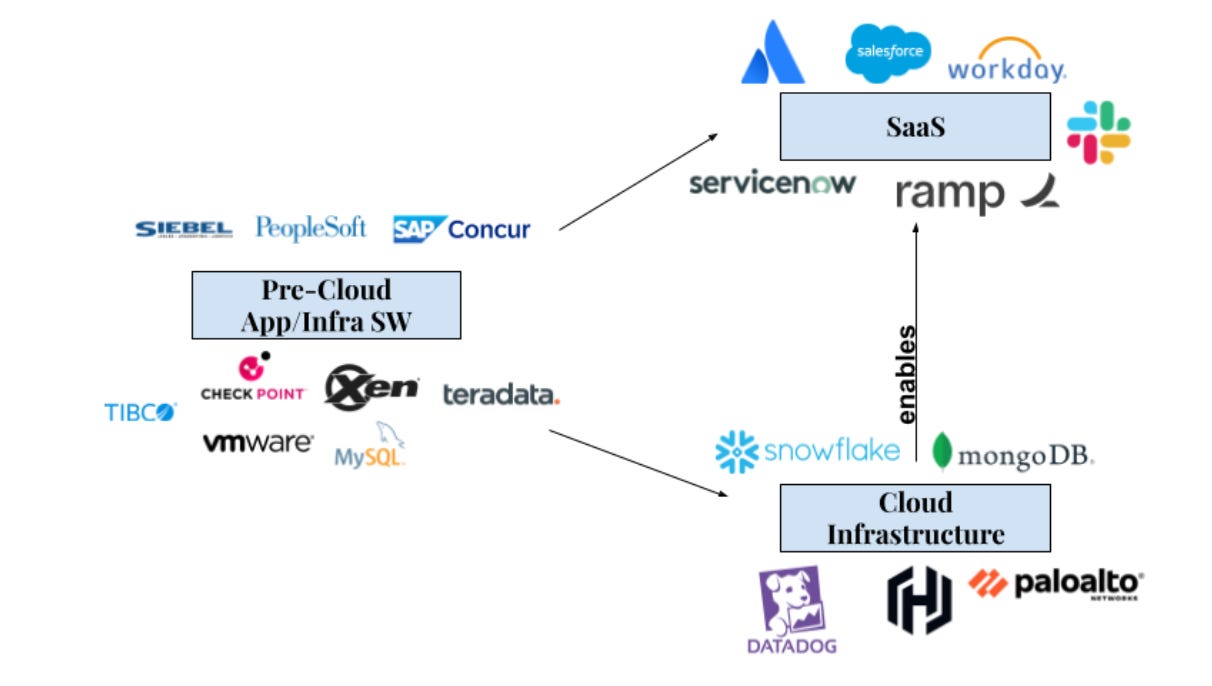

Software infrastructure in the age of AI has been an interesting space to spend time in. The on-prem to cloud transition followed a very linear pattern: categories that were dominated by an on-prem incumbent would see a cloud-native upstart disrupt the market. The mapping wasn’t necessarily 1:1, but it was close. George Kurtz left McAfee to start CrowdStrike. Nir Zuk, a Check Point alum, went on to start Palo Alto Networks. People who had used Symantec for years became familiar with Rubrik. These infrastructure platforms became building blocks for software-as-a-service applications to run at scale. It became possible to solve old problems in new ways, and the explosion of infrastructure made it radically easier for companies at the application layer to get started and to scale with less friction. Infrastructure enables applications, applications at the frontier run into technical problems that demand new infrastructure, and infrastructure gets even better to enable new applications. It’s a symbiotic cycle that has been core to the last few decades of enterprise software.

And without a doubt, that cycle is continuing. But there’s a twist now. AI security doesn’t just mean underlying security platforms that let enterprises run AI workloads securely at scale; it also means companies that can tackle existing security problems with LLMs in ways that were not conceivable before. The same goes for observability. The natural mapping of the application and infrastructure monitoring space (think Datadog, AppDynamics, New Relic) to the AI era looks like LLM monitoring and evals (which our portfolio company Arthur is playing in!), but there’s also a new crop of companies tackling current observability problems, traditionally the domain of SREs, with LLMs (think Resolve AI, Traversal, etc.).

The Internet and cloud were distribution mechanisms; Salesforce beat Siebel because its product was accessible via the Internet at a lower price point and the company was able to innovate and expand product scope faster, but a CRM is a CRM. A lot of the real invention for the application software companies was the SaaS business model: charging for software with a seat-based subscription versus a one-time license (and then charging for support, services, etc.). If on-prem → SaaS was a scaling of an existing vector, SaaS → AI applications is the birth of a new vector altogether, because the capabilities of the software expand beyond being a platform for doing work: the software now does the work itself. This means that the surface area AI-native application software can enter is any realm where knowledge work is done, allowing agentic software to permeate infrastructure categories in ways SaaS applications couldn’t.

In some ways, this reshapes software for good. What do we think of a cybersecurity company harnessing agents to protect AI workloads? Is it an infrastructure software play or an application software play? Think of CrowdStrike releasing its Charlotte AI agents to tackle bottlenecks in the SOC: does this represent an expansion into the application layer? Or Datadog releasing Bits AI, a suite of autonomous agents across monitoring, development, and security. Dagster Labs also comes to mind: a company focused on data pipelines (traditionally an infrastructure category), it recently released Compass, a tool that lets business users ask questions in natural language from within Slack and get insights instantly. In the fullness of time, do infrastructure platforms need to make such evolutions (and transcend the notion of being an “infra company” itself) in order to compete? The redefinition of what it means to be a software application in the age of AI (agentic!) opens the door both for software in industries previously resistant to it (see Legora and Harvey in legal, Basis in accounting, etc.) and for vectors along which infrastructure players can move up the stack.

But there’s something else going on here. It’s not just infrastructure becoming applications; current applications are simultaneously becoming infrastructure for the next generation of agents. As Bret Taylor recently said when asked whether current SaaS markets like ERP and CRM will be fully disrupted by agentic AI:

“If you look at software-as-a-service simply, it really is a database in the cloud with workflows on top. What agents will end up doing is handling those workflows. I haven’t done a lot of work on ERP systems, but imagine procurement processes, contracting, and all the steps that make them up—and then an Ernst & Young auditor using the system to review your financials for a quarterly earnings report. How much of that will eventually be done by AI agents? And then: what happens to the value of the platform when the forms and fields in the web browser are used much less? It’s not nothing. The ledger—the balanced books themselves—are still incredibly valuable.”

In a time (not too far out in the future) when agents own a number of the workflows that constitute knowledge work, the substrate those agents run on will be what we think of as SaaS today (it will be part of the context and data upon which they act). Agents may relate to SaaS as SaaS relates to infrastructure. Call it “applications for agents” or call it infrastructure, but to an agent, the two will likely appear as one and the same. Gokul Rajaram was prescient in talking about this more than a year ago: because agents have no need for a software UI/UX, SaaS and its underlying primitives are essentially rendered equal.

From the human point of view, infrastructure companies are becoming application companies as they build agents. From an agent’s point of view, software infrastructure and SaaS are essentially converging into one and the same: building blocks and contextual ingredients that agents harness to operate at scale. For all companies involved, two user personas are of utmost importance: humans and agents.

I hypothesize a barbelling here: software optimized for human users will slowly converge into agentic software (regardless of the layer of the stack it occupied pre-2025). Agents will strictly dominate traditional SaaS as a more optimal way to interface with a business user (why would a business user choose a platform to do a workflow over a platform that does the workflow?), and current infrastructure players will take this market expansion opportunity in stride en route to “becoming agentic.” We’re even seeing LLMs used to optimize LLMs: for example, Cognition uses a context compression LLM to optimize memory for the agents they build. At the same time, especially as agents take off, designing systems in accordance with agents’ preferences will slowly become a priority, with applications potentially shifting more and more to accommodate this as well. It’s an innovator’s dilemma that requires loads and loads of humility to acknowledge that a) in the steady state, many of the workflows that rest on SaaS platforms will be absorbed by agentic systems, and b) as great as a SaaS platform can make its own agent, the market will be inherently oligopolistic, hence the value in building MCP servers that allow external agents to use its software (with some economic transaction embedded within).

And building for agents will require a mental shift! Guillermo Rauch from Vercel shared some interesting insight around how agent preferences diverge from human preferences in a recent interview:

“Today if you write a documentation website, you’re putting a lot of navigation and pixels, and that’s great for humans—you still want to have that—but AIs just need raw signal. There’s an emerging convention: HTML for humans and .txt, literally .txt, like the 1950s BBS and Unix terminals [for agents]. LLM.txt or raw markdown is better for agents. If you’re building developer tools today, you have to have that duality in your head. You need to think: how do I make my content, my errors, my developer tools great for agents? What’s neat is I’ve always been a geek of the Unix days and command line interfaces and terminals, and those things are actually amazing for agents.”
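To make that duality a bit more concrete, here is a minimal sketch of one way the same documentation page could be served in two forms: HTML with navigation for humans, raw markdown for agents. Everything in it is an assumption for illustration (the `DOCS` store, the page name, the function names, and the choice to switch on the `Accept` header); it is not how Vercel or any particular tool does it.

```python
from html import escape

# Hypothetical in-memory docs store; the page name and content are illustrative only.
DOCS = {
    "quickstart": {
        "title": "Quickstart",
        "body": "Install the CLI, then run `deploy` from your project root.",
    }
}


def render_for_human(doc: dict) -> str:
    """Wrap the content in navigation and markup meant for a browser."""
    return (
        "<html><body>"
        "<nav><a href='/'>Home</a> | <a href='/docs'>Docs</a></nav>"
        f"<h1>{escape(doc['title'])}</h1>"
        f"<p>{escape(doc['body'])}</p>"
        "</body></html>"
    )


def render_for_agent(doc: dict) -> str:
    """Return raw markdown: the same signal, with no navigation or styling."""
    return f"# {doc['title']}\n\n{doc['body']}\n"


def serve(page: str, accept: str = "text/html") -> tuple[str, str]:
    """Pick a representation from the Accept header; returns (content_type, body)."""
    doc = DOCS[page]
    # Assumption: agents ask for markdown or plain text; everything else gets HTML.
    if "text/markdown" in accept or "text/plain" in accept:
        return "text/markdown", render_for_agent(doc)
    return "text/html", render_for_human(doc)
```

Calling `serve("quickstart")` returns the HTML version with its nav bar, while `serve("quickstart", accept="text/markdown")` returns only the heading and body text. The point of the sketch is that both representations come from one source of truth, so serving agents does not have to mean maintaining a second set of docs.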

There’s certainly a barbelling that is happening here, when thinking about software designed for humans versus software designed for LLMs/agents. But at both extremes of the distribution, there are convergences, with players equilibrating at similar points to optimally serve the customer at hand. Excited to see how this plays out! If you’re building for the next generation of software and thinking about similar things, please reach out - I’m at proby@work-bench.com.